
How to Improve NLU Accuracy: 6 Issues with Chatbot Accuracy and Performance

In this post, we review six issues that limit NLU accuracy (and chatbot performance) and offer a recommendation for each. Our suggested treatments look at both NLU design and operational data and will hopefully leave you with a better understanding of why both are key to continuously improving overall bot accuracy and Conversational AI experiences.

Chatbots are front-page news again, but this time it’s because of a good chat experience, with ChatGPT dominating dinner table conversations, LinkedIn streams and technical discussions at meetups. So how has OpenAI (the company behind ChatGPT and the GPT-3 model) been able to create a near human-like conversation experience while most chatbots struggle to meet basic customer expectations?

There are many reasons, including hundreds of millions of dollars in investment by OpenAI and huge teams of data scientists and AI engineers. But most of all, according to what has been written extensively on the subject, the difference comes from the vast amounts of data used to train the model and drive NLU accuracy, together with custom algorithms for feedback, reinforcement learning and incremental model training.

So to be clear, this article is not suggesting that we can get your NLU chatbot to be as “good” as ChatGPT, but the techniques we discuss will help improve your current enterprise solution and deliver a better return on the investments you have already made in this technology.

In a prior post, we covered four primary reasons for chatbot failure and offered advice on how to build better conversational experiences, faster, with a focus on conversational design, better use case definition, key operational considerations and central conversational AI platform architecture. In this post we focus specifically on chatbot accuracy from the perspective of the NLU, and on how businesses can influence that accuracy through insights and recommendations that cover both bot design and operations.

Managing NLU Accuracy is Not for the Faint of Heart

Many large businesses have been deploying chatbots for several years now. Whether the challenge is managing multiple bots across various business functions, or managing a single mega bot whose size and complexity make it impossible to improve in an efficient and timely manner, it’s evident that the accuracy and performance challenges faced by businesses at later stages of Conversational AI maturity are far more complex than those faced by businesses just getting started.

Let’s face it, bots that were deployed years ago have evolved into a spider web of complexity over time thanks to changing business priorities, new bot owners and too many cooks in the kitchen working on bot improvements with little documentation on the company process for handling changes. As new bots were introduced, management of these bots became more nuanced and had to be handled at an individual bot level. Managing an ever-growing library of intents and utterances and feeding back learnings for improved training, while preventing regression, becomes challenging as your NLU chatbot ecosystem grows more complex.

As a result, chatbot accuracy (NLU accuracy) and ultimately customer experience suffer.

Critical questions you should be asking yourself as the owner of a company’s conversational AI or bot strategy are as follows:

What is chatbot accuracy?

The percentage of utterances that return the correct response/action.

Why is chatbot accuracy important?

It impacts user experience, bot performance, and business metrics.

How often is your chatbot giving a helpful and correct answer to the user?

You may be surprised to learn that it could be less than half the time (if you even have the analytics to figure this out to begin with).
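As a rough illustration of the definition above, here is a minimal sketch of how that percentage could be computed from a labelled sample of conversations. The function name `chatbot_accuracy`, the intent names and the sample data are all assumptions made for this example; your platform’s analytics export will look different.

```python
def chatbot_accuracy(results):
    """Share of utterances whose predicted intent matches the expected one.

    results: list of (expected_intent, predicted_intent) pairs.
    """
    if not results:
        return 0.0
    correct = sum(1 for expected, predicted in results if expected == predicted)
    return correct / len(results)


# Hypothetical, hand-labelled sample of production traffic (illustration only).
logged = [
    ("check_balance", "check_balance"),
    ("reset_password", "check_balance"),
    ("reset_password", "reset_password"),
    ("open_account", "fallback"),
]

print(f"accuracy = {chatbot_accuracy(logged):.0%}")
```

In this made-up sample, two of the four utterances are handled correctly, so the bot is right only half the time.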

Improving Bot Accuracy is a Manual, Time Consuming and Costly Endeavor

Experienced teams understand that improving bot accuracy is an expensive and time consuming task that is highly manual, frustrating and generally involves a team of experienced and expensive data scientists and AI engineers, who are often difficult to find, hire and retain.

Beyond doing this work manually in-house, the solutions currently available for helping product and IT teams optimize NLU accuracy and bot performance across the business have primarily been professional or managed services engagements. In these, vendors use spreadsheets to structure improvements before handing them back to the business, which then manually imports the changes and updates the bot.

This process takes time and money and results in undesired learning delays: reviewing the data, documenting updates and uploading them back to the NLU can take weeks or more, which delays the improved customer experience from reaching production.

Businesses may also choose to leverage bot testing technologies that offer a flavor of testing tools and techniques, either inside their chosen conversational AI platform or 3rd party tools that plug into their platform, to help identify areas for optimization. These tools are often limited and don’t offer integrated and automated treatment paths for applying identified areas of improvement back to the NLU model, leaving the business to handle this manually.

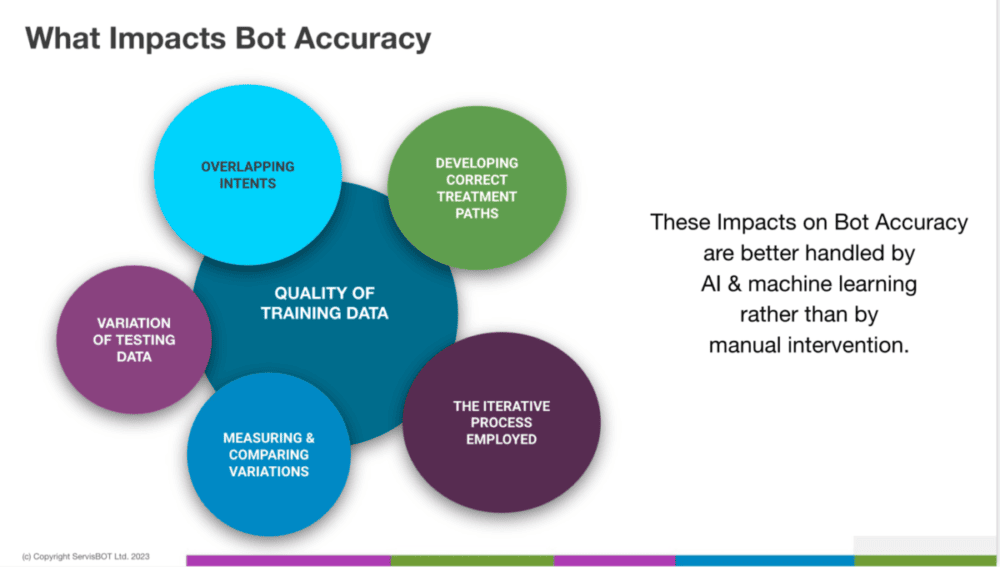

6 issues with NLU accuracy leading to average customer experiences

- Quality of Training Data

- Overlapping Intents

- Variations in Testing Data

- Measuring and Comparing Versions

- Developing Correct Treatment Paths

- The Iterative Process Involved

1. Quality of training data

NLU accuracy depends directly on the quality of your training data. When building an NLU chatbot, the quality of the training data matters more than its volume. In other words, large volumes of wrong training data can be just as problematic as too little training data. Poor quality training data introduces confusion and overlap with other intents and makes the task of identifying the right type of training data very difficult.

“The purpose of data is to extract meaningful information from it; e.g., to train machine learning models. High-quality data leads to high-quality information. Conversely, bad data leads to a significant deviation from the intended result. The problem with low-quality data is that it still produces results. These results, though wrong, merely appear as “different” to those obtained from high-quality data. This leads to poor decision making and sometimes even economic losses for the business.” Source: sweetcode.io

Recommendation: Ensure your chatbot and conversational AI solutions have an integrated mechanism to test and measure quality of your training data, continuously, suggesting areas for improvement and providing tooling to improve the quality of your training data on an ongoing basis.
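To make “test and measure quality” a little more concrete, here is a minimal sketch of three basic checks you could run on training data: exact duplicates, the same utterance labelled with more than one intent, and intents with very few examples. The function `audit_training_data`, the `min_per_intent` threshold and the sample data are assumptions for this illustration, not part of any particular platform.

```python
from collections import Counter, defaultdict


def audit_training_data(examples, min_per_intent=3):
    """examples: list of (utterance, intent) pairs. Returns a dict of findings."""
    # Normalise case and whitespace so trivial variants count as the same text.
    normalised = [(" ".join(u.lower().split()), i) for u, i in examples]

    counts = Counter(normalised)
    duplicates = sorted(pair for pair, n in counts.items() if n > 1)

    intents_by_utterance = defaultdict(set)
    for utterance, intent in normalised:
        intents_by_utterance[utterance].add(intent)
    conflicts = {
        u: sorted(intents)
        for u, intents in intents_by_utterance.items()
        if len(intents) > 1
    }

    # Count distinct utterances per intent and flag intents with too few.
    per_intent = Counter(intent for _, intent in set(normalised))
    thin = sorted(i for i, n in per_intent.items() if n < min_per_intent)

    return {"duplicates": duplicates, "conflicts": conflicts, "thin_intents": thin}


# Hypothetical training data (illustration only).
examples = [
    ("What is my balance?", "check_balance"),
    ("what is my  balance?", "check_balance"),  # duplicate after normalisation
    ("Show my balance", "check_balance"),
    ("How much money do I have", "check_balance"),
    ("I forgot my password", "reset_password"),
    ("I forgot my password", "login_help"),  # same text, two intents
]

report = audit_training_data(examples)
for finding, details in report.items():
    print(finding, details)
```

Checks like these only catch the obvious defects; they are a starting point for continuous measurement rather than a complete quality score.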

2. Overlapping intents

When building intents that have to respond to a wide variety of customer-related queries, it becomes difficult to avoid overlapping intents, where utterances are undoubtedly similar across intents. Whether it’s due to the training data used in the initial design, or whether the overlap is created through the process of adding missed inputs to existing intents or creating new intents on-the-fly, the problem often compounds over time and becomes difficult to untangle.

“These overlaps are really conflicts, which can exist between two, three or more separate intents. Once your chatbot grows in the number of intents, and also the number of examples for each intent, finding overlaps or conflicts become harder.” Source: Cobus Greyling

Recommendation: Use a conversational AI solution that integrates semantic detection to identify true overlap within and between your bots, highlights each confusion point and provides an automated treatment path.
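The idea of flagging confusion points between intents can be sketched in a few lines. Note the swap: the example below uses simple word overlap (Jaccard similarity) as a stand-in for true semantic detection, which would normally rely on sentence embeddings. The function names, the 0.5 threshold and the training data are assumptions for illustration.

```python
from itertools import combinations


def tokens(text):
    """Lower-cased set of words in an utterance."""
    return set(text.lower().replace("?", "").split())


def jaccard(a, b):
    """Word overlap between two utterances, from 0.0 (none) to 1.0 (identical)."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def find_overlaps(training, threshold=0.5):
    """training: dict of intent -> list of utterances.

    Returns (score, intent_1, utterance_1, intent_2, utterance_2) tuples for
    utterance pairs in different intents whose similarity meets the threshold.
    """
    flat = [(intent, u) for intent, utterances in training.items() for u in utterances]
    hits = []
    for (i1, u1), (i2, u2) in combinations(flat, 2):
        if i1 != i2:
            score = jaccard(u1, u2)
            if score >= threshold:
                hits.append((round(score, 2), i1, u1, i2, u2))
    return sorted(hits, reverse=True)


# Hypothetical intents and utterances (illustration only).
training = {
    "cancel_order": ["cancel my order", "i want to cancel my order"],
    "refund_request": ["i want my money back", "cancel my order and refund me"],
    "track_order": ["where is my package"],
}

overlaps = find_overlaps(training)
for hit in overlaps:
    print(hit)
```

Comparing every pair of utterances grows quadratically with the size of the training set, which is one reason this becomes impractical to do by hand or with naive scripts as a bot grows.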

3. Variations in testing data

Perhaps the most significant factor when it comes to testing a bot is the challenge of creating wide variations of training data in sufficient quantities to replicate real-world scenarios. It is even harder for a developer to come up with independent testing data for their own design because there is often a built-in bias. This is often why there is a discrepancy between the accuracy of a bot measured in the testing phase and its accuracy in real-world deployment.

“The goal of a good machine learning model is to generalize well from the training data to any data from the problem domain. There is a terminology used in machine learning when we talk about how well a machine learning model learns and generalizes to new data, namely overfitting and underfitting.

Overfitting and underfitting are the two biggest causes for poor performance of machine learning algorithms. Overfitting happens when a model learns the detail and noise in the training data to the extent that it negatively impacts the performance of the model on new data. Underfitting refers to a model that can neither model the training data nor generalize to new data. Ideally, you want to select a model at the sweet spot between underfitting and overfitting.” Source: Jason Brownlee

Recommendation: Ensure your conversational AI or chatbot solution has an integrated ability to automatically generate variations from the training data to provide a better test for the model in the real world.
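One very simple way to generate variations from training data is synonym substitution. The sketch below is a toy version of that idea; production tools typically use far richer techniques such as paraphrasing models. The `SYNONYMS` table and the `variations` function are hypothetical and exist only for this example.

```python
from itertools import product

# Hypothetical synonym table; a real one would come from your own domain data.
SYNONYMS = {
    "cancel": ["cancel", "stop", "call off"],
    "order": ["order", "purchase"],
}


def variations(utterance, synonyms=SYNONYMS):
    """Return new phrasings made by swapping known words for their synonyms."""
    words = utterance.lower().split()
    options = [synonyms.get(word, [word]) for word in words]
    generated = {" ".join(choice) for choice in product(*options)}
    generated.discard(" ".join(words))  # keep only phrasings not in the input
    return sorted(generated)


test_utterances = variations("cancel my order")
for utterance in test_utterances:
    print(utterance)
```

The generated phrasings can then be held out as test data, so the model is measured on wording it has not seen during training.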

4. Measuring and comparing versions

It’s hard to compare one version of a machine learning model to another as the number of intents grows. Accuracy is the metric most often (over-)used to compare one model to another, but accuracy alone can be misleading, for example when a few high-volume intents dominate the test set. Other measures are needed, and balancing them while preventing regression in your chatbot becomes a difficult task.

“Making assumptions about new data based on old data is what machine learning (ML) is all about. The accuracy of those predictions is essentially what determines the quality of any machine-learning algorithm. The most well-known application is classification, and the metric for it is “accuracy.” It should be noted that machine learning model accuracy isn’t the best measure of an ML model, especially when working with class-imbalanced data where there is a huge difference between the positive and negative results. Precision and Recall should be taken into account as well.” Source: Deepchecks.com

Recommendation: Employ a solution that combines a range of chatbot evaluation metrics into a single view to allow for easy comparison of versions.
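To show why accuracy alone is not enough when comparing versions, here is a minimal sketch that computes precision, recall and F1 per intent and flags intents whose F1 dropped between two versions. The function names, the intent names and the predictions are assumptions for illustration; in the made-up data both versions score the same overall accuracy, yet one intent gets worse.

```python
from collections import Counter


def per_intent_metrics(pairs):
    """pairs: list of (expected, predicted). Returns {intent: (precision, recall, f1)}."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for expected, predicted in pairs:
        if expected == predicted:
            tp[expected] += 1
        else:
            fp[predicted] += 1  # predicted intent was wrongly triggered
            fn[expected] += 1   # expected intent was missed
    metrics = {}
    for intent in set(tp) | set(fp) | set(fn):
        predicted_total = tp[intent] + fp[intent]
        expected_total = tp[intent] + fn[intent]
        p = tp[intent] / predicted_total if predicted_total else 0.0
        r = tp[intent] / expected_total if expected_total else 0.0
        f1 = 2 * p * r / (p + r) if p + r else 0.0
        metrics[intent] = (p, r, f1)
    return metrics


def regressions(old, new, tolerance=0.0):
    """Intents whose F1 dropped by more than `tolerance` from old to new."""
    missing = (0.0, 0.0, 0.0)
    return sorted(i for i in old if new.get(i, missing)[2] < old[i][2] - tolerance)


# Hypothetical test set and predictions from two model versions.
expected = ["balance", "balance", "balance", "reset", "reset"]
v1_predicted = ["balance", "balance", "reset", "reset", "reset"]
v2_predicted = ["balance", "balance", "balance", "balance", "reset"]

v1 = per_intent_metrics(list(zip(expected, v1_predicted)))
v2 = per_intent_metrics(list(zip(expected, v2_predicted)))

print("regressed intents:", regressions(v1, v2))
```

Both versions get four of the five utterances right, but the second version trades recall on one intent for precision on the other, which a single accuracy number hides.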

5. Developing correct treatment paths

Identifying the problem is a good first step, but developing treatment paths that automatically correct the issues is the big hurdle. Current processes often rely on manual tasks to review missed intents, false positives and false negatives, and to come up with new training data. For example, if you have confusion due to overlapping intents, it can be mind-numbing to look at all the data and decide whether to remove the utterance from one intent or change the phrasing to try to eliminate the overlap.

Trying to manually keep track of all the utterances and training data in all the intents and then choosing the most appropriate treatment is something that is only suitable for small and simple models with limited numbers of intents. This is exactly what AI is good at doing – scanning lots of data and identifying patterns and coming up with treatment paths that can include expanding or removing utterances, duplicate removal, data clustering and more.

“Both precision and recall can be improved with high-quality data, as data is the foundation of any machine learning model. The better the data, the more accurate the predictions will be. One way to improve precision is to use data that is more specific to the target variable you are trying to predict.” Source: TowardsDataScience.com

Recommendation: Leverage a solution that automatically identifies design defects and confusion points, and suggests a number of different treatment paths that address the defects within the model and improve the data quality.
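As a small illustration of what a suggested treatment might look like, the sketch below takes an utterance that appears in more than one intent and proposes which intent should keep it, based on how closely it resembles the other utterances in each intent. It uses plain word overlap as a simple stand-in for whatever a real tool would use, and the function names and data are hypothetical.

```python
def tokens(text):
    """Lower-cased set of words in an utterance."""
    return set(text.lower().split())


def affinity(utterance, others):
    """Average word overlap (Jaccard) between an utterance and a list of others."""
    if not others:
        return 0.0
    t = tokens(utterance)
    scores = [len(t & tokens(o)) / len(t | tokens(o)) for o in others]
    return sum(scores) / len(scores)


def suggest_treatment(utterance, training):
    """Suggest which intent should keep an utterance that several intents share.

    training: dict of intent -> list of (non-empty) utterances.
    Returns None when the utterance is not found in any intent.
    """
    owners = [intent for intent, us in training.items() if utterance in us]
    if not owners:
        return None
    scores = {
        intent: affinity(utterance, [u for u in training[intent] if u != utterance])
        for intent in owners
    }
    keep = max(scores, key=scores.get)
    return {"keep_in": keep, "remove_from": sorted(i for i in owners if i != keep)}


# Hypothetical training data with one utterance shared by two intents.
training = {
    "cancel_order": ["cancel my order", "please cancel the order", "stop my order"],
    "refund_request": ["cancel my order", "i want a refund", "give me my money back"],
}

suggestion = suggest_treatment("cancel my order", training)
print(suggestion)
```

A suggestion like this is still something a person should review before it is applied; the point is to narrow down the decision rather than to make it blindly.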

6. The iterative process involved

Finding and removing all the points of confusion in a bot with a large number of intents is not a one and done task – it’s an iterative process where you try different treatments and rerun the model to see if it has resolved the issue, only to find the next confusion point which was masked by the previous one. This iterative process of peeling back the confusion is like unraveling a huge ball of spaghetti.

“Optimization is the process where we train the model iteratively that results in a maximum and minimum function evaluation. It is one of the most important phenomena in Machine Learning to get better results. Why do we optimize our machine learning models? We compare the results in every iteration by changing the hyperparameters in each step until we reach the optimum results.” Source: TowardsDataScience.com

Recommendation: Constantly run bot insights against a design until no more treatments can be applied using a tool that is integrated with your conversational AI solution.
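The detect, treat and re-check cycle can be sketched as a simple loop. In this toy version the only “insight” is an utterance that appears in two intents, and the only treatment is removing the later copy; a real workflow would retrain and re-test the model between rounds. The function names, the `max_rounds` safety limit and the data are assumptions for illustration.

```python
def find_issue(training):
    """Return (intent, utterance) for the first utterance shared by two intents, else None."""
    seen = {}  # lower-cased utterance -> first intent that used it
    for intent, utterances in training.items():
        for utterance in utterances:
            owner = seen.setdefault(utterance.lower(), intent)
            if owner != intent:
                return intent, utterance
    return None


def treat_until_clean(training, max_rounds=100):
    """Repeatedly detect one issue, apply one treatment, and check again."""
    training = {intent: list(us) for intent, us in training.items()}  # work on a copy
    rounds = 0
    while rounds < max_rounds:
        issue = find_issue(training)
        if issue is None:
            break  # no more treatments can be applied
        intent, utterance = issue
        training[intent].remove(utterance)  # treatment: drop the later copy
        rounds += 1
    return training, rounds


# Hypothetical design with two shared utterances (illustration only).
design = {
    "cancel_order": ["cancel my order", "stop my order"],
    "refund_request": ["cancel my order", "stop my order", "i want a refund"],
}

cleaned, rounds = treat_until_clean(design)
print(rounds, cleaned)
```

Each round fixes one confusion point and then exposes the next, which mirrors the peeling-back process described above.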

The final word

In conclusion, if you don’t have a huge budget for massive computing power, teams of data scientists and AI engineers, don’t worry about trying to replicate ChatGPT. Focus on improving the accuracy of your existing investment using tools that can automatically identify the issues that impact NLU accuracy and performance and deliver better customer experiences. Your customers don’t really want to hold a conversation about philosophy with your bot – they want their query or problem addressed as efficiently as possible. That’s the difference that improved accuracy can make.