
Using MLOps to improve AI Training and Bot Performance

This blog post explores how adopting AI MLOps (ML Operations) offers a more comprehensive approach to continuous improvement, combining design and operational improvements that enhance bot performance and help overcome the challenges of training AI models.

“The way we train AI is fundamentally flawed – the process used to build most of the machine-learning models we use today can’t tell if they will work in the real world or not—and that’s a problem.”

That’s the headline of “The way we train AI is fundamentally flawed”, a 2020 MIT Technology Review article that lays out why machine learning models often don’t work in the real world. And it’s not just because there isn’t enough training data. The article looks at many types of AI models, from image recognition to natural language processing, and approaches the problem from a data scientist’s perspective.

Let me tell you about our experience at ServisBOT and how it bears out the problems highlighted in that article. To be clear, I am addressing this issue only from the perspective of Conversational AI solutions in high-volume environments. I will outline, in simple terms, why there is a problem in the real-world use of conversational AI (i.e., chatbot) solutions and offer some ideas about how it can be addressed.

Let’s start with a simple statement…

Improving bot performance in the real world is difficult, especially in a high-volume environment such as customer service at a bank, an insurance company or a telecoms operator, where the number of transactions can run to hundreds of thousands or even millions per month. For example, one of the largest telco operators in the world handles about 10 million contacts per month.

How do you improve bot performance?

Well, the traditional way, as outlined in the article mentioned above, is to train the bot using classification and missed-intent data. The theory was that the more data you give a bot, the better it performs. But as the MIT article laid out, this often doesn’t hold up in practice.

For organizations that have deployed bots, this is often a manual process: a reviewer analyzes missed-utterance data and decides whether each missed utterance belongs to something that already exists (and the NLU didn’t infer it correctly) or is a new request from a customer asking for something the bot doesn’t understand or has not seen before.
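Part of that first-pass decision can be automated. Here’s a minimal sketch, assuming plain word-overlap (Jaccard) similarity as a stand-in for a real NLU model; the triage function, the 0.5 threshold and the sample intents are hypothetical and not how any particular NLU engine works.

```python
import re

SIMILARITY_THRESHOLD = 0.5  # hypothetical cut-off; would need tuning on real data


def tokens(text):
    """Lowercase word tokens: a crude stand-in for an NLU engine's own features."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard(a, b):
    """|A & B| / |A | B|, or 0.0 when both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def triage(missed, training, threshold=SIMILARITY_THRESHOLD):
    """Suggest whether a missed utterance resembles an existing intent or is a new request.
    Returns (status, best_matching_intent_or_None, best_score)."""
    missed_tokens = tokens(missed)
    best_intent, best_score = None, 0.0
    for intent, examples in training.items():
        for example in examples:
            score = jaccard(missed_tokens, tokens(example))
            if score > best_score:
                best_intent, best_score = intent, score
    if best_score >= threshold:
        return "existing", best_intent, best_score
    return "new", None, best_score


# Hypothetical training data: {intent: [training utterances]}.
training_data = {
    "check_balance": ["what is my account balance", "show my balance"],
    "report_lost_card": ["i lost my card", "my card was stolen"],
}
print(triage("what's my balance", training_data))   # → ('existing', 'check_balance', 0.5)
print(triage("can i change my address", training_data))
```

Anything scoring below the threshold goes to a "new request" queue, so a reviewer spends their time only on the genuinely unfamiliar requests.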

Why is improving bot performance difficult?

A few practical thoughts on why this is difficult.

- The NLU/NLP engine is often a black box to the person doing the reviewing.

- Bot training is usually a manual and often expensive exercise.

- The volume of data is very large and grows every single day.

- Finally, there is a time lag between identifying a problem and putting a fix into production. We call this the learning delay, and it carries a hidden cost that we will explain later.

(Register for a 30-day trial of our automated machine learning tool – you upload, we analyze, you review results.)

1. The NLU/NLP is a Black Box

For the average person, the NLU/NLP engine is a black box. We don’t understand how it works well enough to be certain that a change to the bot training model, made in response to a missed utterance, will actually fix the problem.

AI works on statistics and pattern recognition, but those patterns interact with other patterns, and this intricate set of interdependencies makes it difficult for a reviewer to know exactly how to fix a given topic.

A big consequence of this interconnectedness is regression, where something that previously worked no longer works because of a change that was made. This happens frequently in bots that have overlapping intents and uneven training data.
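A regression check can be as simple as re-running a fixed, labeled test set through both the current and the retrained model and listing anything that used to be right and is now wrong. A minimal sketch, with hypothetical intents and predictions standing in for real model output:

```python
def find_regressions(test_set, old_predictions, new_predictions):
    """Return (utterance, expected, new_prediction) for every test utterance that the
    old model classified correctly but the new model now gets wrong."""
    regressions = []
    for utterance, expected in test_set.items():
        was_correct = old_predictions.get(utterance) == expected
        is_correct = new_predictions.get(utterance) == expected
        if was_correct and not is_correct:
            regressions.append((utterance, expected, new_predictions.get(utterance)))
    return regressions


# Hypothetical labeled test set and model outputs.
test_set = {
    "show my balance": "check_balance",
    "i lost my card": "report_lost_card",
    "freeze my card": "freeze_card",
}
old_model = {"show my balance": "check_balance",
             "i lost my card": "report_lost_card",
             "freeze my card": "check_balance"}   # wrong before retraining
new_model = {"show my balance": "check_balance",
             "i lost my card": "freeze_card",      # broken by the new freeze_card data
             "freeze my card": "freeze_card"}      # fixed by retraining

print(find_regressions(test_set, old_model, new_model))
# → [('i lost my card', 'report_lost_card', 'freeze_card')]
```

Here the retraining fixed one utterance but broke another in an overlapping intent, exactly the kind of side effect that is easy to miss when reviewing by hand.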

Added to this is the positive-response bias built into AI, which we call bot greediness. The bot wants to answer the question even when it might not know the answer; it doesn’t consider simply giving up, and it will almost always answer, even if its confidence is low. That’s why we have to take confidence levels into account when evaluating responses: the bot will try to answer even when we know it is wrong.
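Part of that greediness comes from how classifiers are commonly built: scores are normalized so they sum to 1 and the highest one wins, so there is always an answer. A minimal sketch of the usual countermeasure, a confidence threshold with a fallback, assuming a softmax over raw scores; the 0.6 threshold, the fallback intent and the scores are all hypothetical.

```python
import math

FALLBACK = "fallback"  # hypothetical intent: ask the customer to rephrase or hand off to an agent


def softmax(scores):
    """Convert raw per-intent scores into probabilities that sum to 1."""
    top = max(scores.values())
    exps = {intent: math.exp(s - top) for intent, s in scores.items()}  # subtract max for stability
    total = sum(exps.values())
    return {intent: e / total for intent, e in exps.items()}


def choose_intent(raw_scores, min_confidence=0.6):
    """Pick the most likely intent, but fall back when its probability is below min_confidence."""
    probs = softmax(raw_scores)
    best = max(probs, key=probs.get)
    if probs[best] < min_confidence:
        return FALLBACK, probs[best]
    return best, probs[best]


# Hypothetical raw scores from a classifier.
ambiguous = {"check_balance": 2.0, "report_lost_card": 1.8, "freeze_card": 1.7}
clear = {"check_balance": 4.0, "report_lost_card": 1.0, "freeze_card": 0.5}
print(choose_intent(ambiguous))
print(choose_intent(clear))
```

Without the threshold, the ambiguous utterance would be answered as check_balance with under 40% confidence.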

The traditional answer to these problems was more training data, but this is now being questioned, and we think there are better ways to improve a bot than giving a reviewer ever more data to process manually.

2 & 3. Manual Training is Laborious and the Volume of Data can be High

In the real world of practical Conversational AI (CAI) solutions, post-deployment training is often a manual and laborious task performed by someone who is usually familiar with the topic area but not necessarily with the black box.

In some instances, often in large organizations, this might be a data scientist, but usually it’s someone with knowledge of the business area who can screen customer requests and determine whether they fall within current capability (i.e., existing intents) or represent new requirements (i.e., new intents).

The work can be tedious: analyzing customer messages (utterances) that were not classified (missed intents) or were incorrectly classified (false positives), and matching them against the hundreds or thousands of utterances used to train up to a hundred (or possibly several hundred) existing intents.

The volume of data to be processed can be enormous, and we have found that manual training delivers diminishing returns when both the volume of data and the number of possible intents are high. People use and understand language in different ways, and similarity of wording or phrasing can be very subjective, leading to confusion and overlap in the training data. Quite simply, in high-volume environments this work is much better suited to automation (ironically, using AI) than to manual processing.
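One way automation can cut the review workload is to group similar missed utterances, so a reviewer looks at a handful of clusters instead of thousands of individual messages. Here’s a minimal sketch using greedy "leader" clustering on word overlap; it’s a deliberately simple stand-in for the embedding-based clustering a production system might use, and the threshold and sample utterances are hypothetical.

```python
import re


def tokens(text):
    """Lowercase word tokens as an immutable set."""
    return frozenset(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard(a, b):
    """Word-overlap similarity between two token sets (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cluster_missed(utterances, threshold=0.4):
    """Greedy leader clustering: each utterance joins the first cluster whose
    leader (first member) is similar enough; otherwise it starts a new cluster."""
    clusters = []  # list of (leader_tokens, member_utterances)
    for text in utterances:
        text_tokens = tokens(text)
        for leader_tokens, members in clusters:
            if jaccard(text_tokens, leader_tokens) >= threshold:
                members.append(text)
                break
        else:  # no existing cluster was close enough
            clusters.append((text_tokens, [text]))
    # Largest clusters first: the most common unmet requests.
    return sorted((members for _, members in clusters), key=len, reverse=True)


missed = [
    "how do i change my address",
    "change my address please",
    "i want to change my address",
    "is there a branch near me",
    "is there a branch near my house",
]
for cluster in cluster_missed(missed):
    print(len(cluster), cluster)
```

Sorting by cluster size puts the most frequent unmet requests first, and those are natural candidates for new intents.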

4. The Learning Delay

And of course, this all takes time to process.

The time lag between a customer asking something the bot doesn’t understand and the production system being updated to handle it is often lengthy: weeks or months. We call this time lag the learning delay, i.e., the amount of time before the bot learns how to handle that particular request, or learns new skills based on what customers are asking.

This learning delay has a hidden cost: the number of customers affected by the same (or a similar) issue from the time it is first highlighted (through a missed intent) to the time the model is updated to handle it. In high-volume conversational AI solutions, we have seen missed-utterance rates of 10-30%.

Even if the bot were updated every month, that’s a lot of potential customers affected by the learning delay, and this will surely have an impact on customer satisfaction.
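A back-of-the-envelope way to size that hidden cost is to multiply monthly contact volume by the missed-utterance rate and by the length of the learning delay. This is a minimal sketch that assumes the missed rate stays constant until the next model update; combining the 10 million contacts per month and the 10-30% missed rates quoted above is purely for illustration, not a measurement from any single deployment.

```python
def contacts_hitting_missed_intents(contacts_per_month, missed_rate, delay_months):
    """Rough estimate of contacts that hit a missed intent during one learning-delay
    window, assuming the missed rate stays constant until the model is updated."""
    return round(contacts_per_month * missed_rate * delay_months)


# Illustrative only: 10 million contacts/month with monthly updates (1-month delay).
for rate in (0.10, 0.30):
    print(rate, contacts_hitting_missed_intents(10_000_000, rate, 1))
```

At a 10% missed rate that is roughly 1,000,000 contacts in a single month; at 30%, roughly 3,000,000. Halving the delay halves the figure, which is why shortening the learning delay matters as much as improving accuracy.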

Summarizing the Problem of Manual AI Training

So, to summarize, we believe the problem of manual AI bot training is a combination of manual training methods applied to an NLU/NLP black box that the person doing the training might not fully understand, compounded by the volume of data, all playing out over time.

The result of this is a loss of trust in the CAI solution, increased costs of trying to maintain and update the bot, and the impact of a learning delay on the customers.

How to Make it Better – MLOps

So, what’s the answer?

We propose that the answer lies in what Gartner has termed AIOps (AI Operations). It’s the equivalent of DevOps in the IT world for software workloads, and it includes a CI/CD (continuous integration/continuous deployment) process for automated learning.

AIOps should be:

- A continuous process – much as software is now deployed many times a day rather than once a month or once a quarter. Given the volume of data, and in order to reduce the learning delay, data should be processed continuously.

- An automated process – as much of the process as possible should be automated. This is the only way to deal with large volumes of data continuously. The irony is that AI has proven to be the most accurate way to process large volumes of data over time, so we need AI to teach our AI to be better. The technique we rely on here is unsupervised machine learning, which finds structure in unlabeled data such as missed utterances.

- Secure and configurable – the process needs to be secure, to avoid leaking customer data, and configurable for your business, so you can tap into business knowledge.

- Regression-proof – the process needs to test for and prevent regressions so that the system is always improving and moving forward.

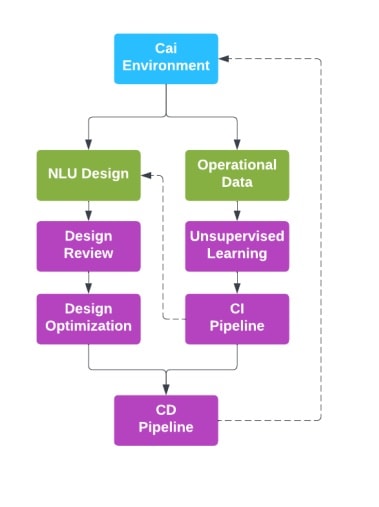

We have developed an automated approach that continuously reviews both the NLU design and operational data in order to provide automatic treatment paths that optimize performance while preventing regression.

The CI pipeline involves numerous steps, beginning with redaction of personally identifiable information (PII) to prevent data leakage and contamination, followed by data cleansing to remove unwanted or inappropriate language (remember Microsoft’s Tay chatbot) and de-duplication of the data.
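These early pipeline stages can be sketched in a few lines. This is a minimal illustration, not our production pipeline: the regular expressions catch only simple email addresses and phone numbers, and BLOCKLIST is a hypothetical placeholder for a real inappropriate-language list. Redaction runs first, so two messages that differ only in their PII collapse into a single duplicate.

```python
import re

# Illustrative patterns only; real PII redaction needs much broader coverage
# (names, account numbers, addresses, card numbers, and so on).
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")
BLOCKLIST = {"badword"}  # hypothetical placeholder for an inappropriate-language list


def redact(text):
    """Replace email addresses and phone-number-like digit runs with placeholder tokens."""
    return PHONE.sub("<PHONE>", EMAIL.sub("<EMAIL>", text))


def is_clean(text):
    """True if no word in the text appears in the blocklist."""
    return not (set(re.findall(r"[a-z']+", text.lower())) & BLOCKLIST)


def normalize(text):
    """Case- and whitespace-insensitive key used for de-duplication."""
    return " ".join(text.lower().split())


def preprocess(utterances):
    """Redact PII, drop inappropriate messages, then de-duplicate (keeping first occurrences)."""
    seen, cleaned = set(), []
    for raw in utterances:
        text = redact(raw)
        if not is_clean(text):
            continue
        key = normalize(text)
        if key not in seen:
            seen.add(key)
            cleaned.append(text)
    return cleaned


sample = [
    "Email me at jane.doe@example.com",
    "Call me on +353 87 123 4567",
    "call me on +353 87 999 0000",
    "this is a badword message",
    "What is my balance?",
    "what is my  balance?",
]
print(preprocess(sample))
```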

The unsupervised learning then does its magic, continually reviewing the classification data and the design parameters to suggest improvements and treatment paths that increase accuracy and performance. A series of regression tests and comparisons against previous results ensures that models don’t get worse before they are made available for deployment in the approval and release cycle.
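A release gate of that kind can be sketched as a per-intent comparison on a fixed, labeled test set: the candidate model is approved only if no intent’s accuracy drops by more than a tolerance. This is a minimal illustration that assumes per-intent accuracy as the metric; the function names, tolerance and sample data are hypothetical.

```python
def accuracy_by_intent(test_set, predictions):
    """Fraction of each intent's test utterances that the model classifies correctly."""
    totals, correct = {}, {}
    for utterance, expected in test_set:
        totals[expected] = totals.get(expected, 0) + 1
        if predictions.get(utterance) == expected:
            correct[expected] = correct.get(expected, 0) + 1
    return {intent: correct.get(intent, 0) / n for intent, n in totals.items()}


def release_gate(test_set, baseline, candidate, tolerance=0.0):
    """Approve the candidate only if no intent's accuracy drops by more than `tolerance`.
    Returns (approved, {intent: (baseline_accuracy, candidate_accuracy)} for regressions)."""
    before = accuracy_by_intent(test_set, baseline)
    after = accuracy_by_intent(test_set, candidate)
    regressed = {intent: (before[intent], after[intent])
                 for intent in before if after[intent] < before[intent] - tolerance}
    return not regressed, regressed


# Hypothetical labeled test set and model predictions.
test_set = [("show my balance", "check_balance"),
            ("what is my balance", "check_balance"),
            ("i lost my card", "report_lost_card"),
            ("freeze my card", "freeze_card")]
baseline = {"show my balance": "check_balance", "what is my balance": "check_balance",
            "i lost my card": "report_lost_card", "freeze my card": "check_balance"}
candidate = {"show my balance": "check_balance", "what is my balance": "check_balance",
             "i lost my card": "freeze_card", "freeze my card": "freeze_card"}
print(release_gate(test_set, baseline, candidate))
```

Overall accuracy is 75% for both models here, so a gate that compared only the headline number would have let the report_lost_card regression through; checking per intent catches it.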

The unsupervised learning module can be applied to Conversational AI training data from most major NLP engines and third-party products.

In addition to our SaaS offering, it is available as a containerized application that can be deployed in any cloud environment or on any container platform.

To get started, just upload design information and classification data and let it run unsupervised. Note that integrating it into your deployment process requires some custom work, depending on your exact environment and release processes.

What to Measure and the Results using MLOps

So what do we measure and what are the results like?

As mentioned, we look at both design configuration and operational data, and these yield different metrics that we can track to prevent regression and improve accuracy.

Insights into the bot design provide the traditional metrics of accuracy, confusion and balance, while additional insights into overlap and training sufficiency help identify the causes of confusion.
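Exact definitions of these design metrics vary, so what follows is a minimal sketch under assumed definitions, not the ones any particular tool uses: balance as the ratio of the smallest to the largest intent’s training set, sufficiency as a hypothetical minimum number of examples per intent, and overlap as the same training utterance appearing under more than one intent.

```python
from collections import defaultdict


def design_report(training, min_examples=10):
    """Assumed design metrics over {intent: [training utterances]}."""
    counts = {intent: len(examples) for intent, examples in training.items()}
    # Balance: 1.0 means every intent has the same number of examples.
    balance = min(counts.values()) / max(counts.values())
    # Sufficiency: intents with fewer examples than the (hypothetical) minimum.
    insufficient = sorted(intent for intent, n in counts.items() if n < min_examples)
    # Overlap: identical (case/whitespace-normalized) utterances under more than one intent.
    owners = defaultdict(set)
    for intent, examples in training.items():
        for example in examples:
            owners[" ".join(example.lower().split())].add(intent)
    overlap = {utt: sorted(intents) for utt, intents in owners.items() if len(intents) > 1}
    return {"balance": balance, "insufficient": insufficient, "overlap": overlap}


# Hypothetical training data.
training = {
    "check_balance": ["what is my balance", "show my balance",
                      "balance please", "my card balance"],
    "report_lost_card": ["i lost my card", "my card was stolen"],
    "freeze_card": ["freeze my card", "My card was stolen"],
}
print(design_report(training, min_examples=3))
```

A fuller check would also flag near-duplicates rather than only exact matches, but even exact overlap like this is enough to confuse two intents.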

On the operational side, insights provide visibility into false positives, missed intents, treatment rate, intent discovery and regression. Together, these show how improvements can be tracked over time, leading to better bot accuracy and, ultimately, higher customer satisfaction.
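The two most basic operational rates can be computed from a reviewed conversation log. A minimal sketch, assuming each log entry pairs the bot’s prediction (None when it fell back) with the intent a reviewer judged correct; the log format and sample data are hypothetical.

```python
def operational_rates(log):
    """log: list of (predicted_intent, reviewed_intent) pairs.
    predicted_intent is None when the bot fell back (a missed intent)."""
    total = len(log)
    missed = sum(1 for predicted, _ in log if predicted is None)
    false_positives = sum(1 for predicted, actual in log
                          if predicted is not None and predicted != actual)
    return {"missed_rate": missed / total,
            "false_positive_rate": false_positives / total}


# Hypothetical reviewed log of five contacts.
log = [
    ("check_balance", "check_balance"),
    (None, "change_address"),             # missed: no existing intent matched
    ("freeze_card", "report_lost_card"),  # false positive: answered, but wrongly
    ("check_balance", "check_balance"),
    (None, "check_balance"),              # missed: existing intent not recognized
]
print(operational_rates(log))
```

Tracking both rates matters because lowering the confidence threshold tends to trade missed intents for false positives, and vice versa.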

The treatment rate is a particularly good metric for understanding how broadly or narrowly the bot has been trained compared with the types of requests customers make in the real world. We have seen treatment rates of around 20-60%, with rates near 20% indicating a divergence between what the bot is trained on and what customers actually want.
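To make treatment rate concrete, here is one possible definition, an assumption made for illustration rather than a formal one: of the utterances the bot got wrong (missed or misclassified), what share belong to an intent the bot already has and could therefore be fixed by retraining? Under this reading a low rate means most failures are requests the bot was never designed for. The log format of (prediction, reviewed intent) pairs and the sample data are hypothetical.

```python
def treatment_rate(log, existing_intents):
    """ASSUMED definition: share of failed utterances (missed or misclassified) whose
    reviewed intent already exists in the bot, i.e. failures retraining could treat.
    Returns 0.0 when there are no failures."""
    failures = [actual for predicted, actual in log if predicted != actual]
    if not failures:
        return 0.0
    treatable = sum(1 for actual in failures if actual in existing_intents)
    return treatable / len(failures)


existing = {"check_balance", "report_lost_card", "freeze_card"}
log = [
    ("check_balance", "check_balance"),
    (None, "change_address"),             # new request: no intent exists yet
    ("freeze_card", "report_lost_card"),  # treatable: intent exists
    ("check_balance", "check_balance"),
    (None, "check_balance"),              # treatable: intent exists
]
print(treatment_rate(log, existing))
```

Two of the three failures here map to existing intents, giving roughly 67%; a rate near 20% would mean most failures are requests the bot was never trained for.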

Overall, we have been able to show a step-change improvement in performance through our balanced, hybrid approach, which combines design reviews and operational recommendations on each pass through the AIOps pipeline.

Depending on model maturity, we typically see performance improvements of up to 60%, resulting in models in the 80-90% accuracy range after 2 to 4 weeks, with sustained incremental improvements thereafter.

In summary, improving bot accuracy is difficult, and adding more data is not the only answer. Rather, a more comprehensive approach to continuous improvement, combining design and operational improvements driven by unsupervised learning, can improve performance, increase customer satisfaction, reduce maintenance costs and shorten the learning delay. AI MLOps is the way to go.

Register for a 30-day trial of our NLU optimization/automated machine learning tool – you upload, we analyze, you review results.