
What is Retrieval-Augmented Generation (RAG) and Why is it so Relevant?

Setting the Scene for Retrieval-Augmented Generation (RAG)

Before defining Retrieval-Augmented Generation and all the terms and acronyms that go with it, let’s start with some context for why it is becoming so relevant for businesses as they adopt generative AI solutions.

Although generative AI has multiple benefits, it also poses challenges, particularly for businesses concerned about the inaccurate, biased, or inconsistent responses it can generate.

Large Language Models (LLMs), despite their advanced capabilities, are prone to these issues primarily because they are trained on vast datasets that can contain biases and inaccuracies. These models can generate responses that sound highly confident but are incorrect or misleading. Hallucination can also occur when the LLM lacks the information it needs to respond and fabricates a plausible-sounding answer instead. Inconsistency, where similar queries yield different answers, further exacerbates the issue. For most industries, accuracy, compliance, and reliability are important; for some, they are non-negotiable.

The stakes are high in enterprise settings, where decisions based on AI-generated insights can affect regulatory compliance, customer trust, market reputation, legal exposure, and financial outcomes. The risk of embedding bias or inaccuracies into automated processes, or of making strategic moves based on incorrect AI-generated data, is a significant concern. As a result, businesses are taking a cautious approach to adopting generative AI solutions, with a focus on mitigating these risks. One safeguard that businesses can adopt is Retrieval-Augmented Generation (RAG), which helps provide reliable, ring-fenced responses.

RAG Defined

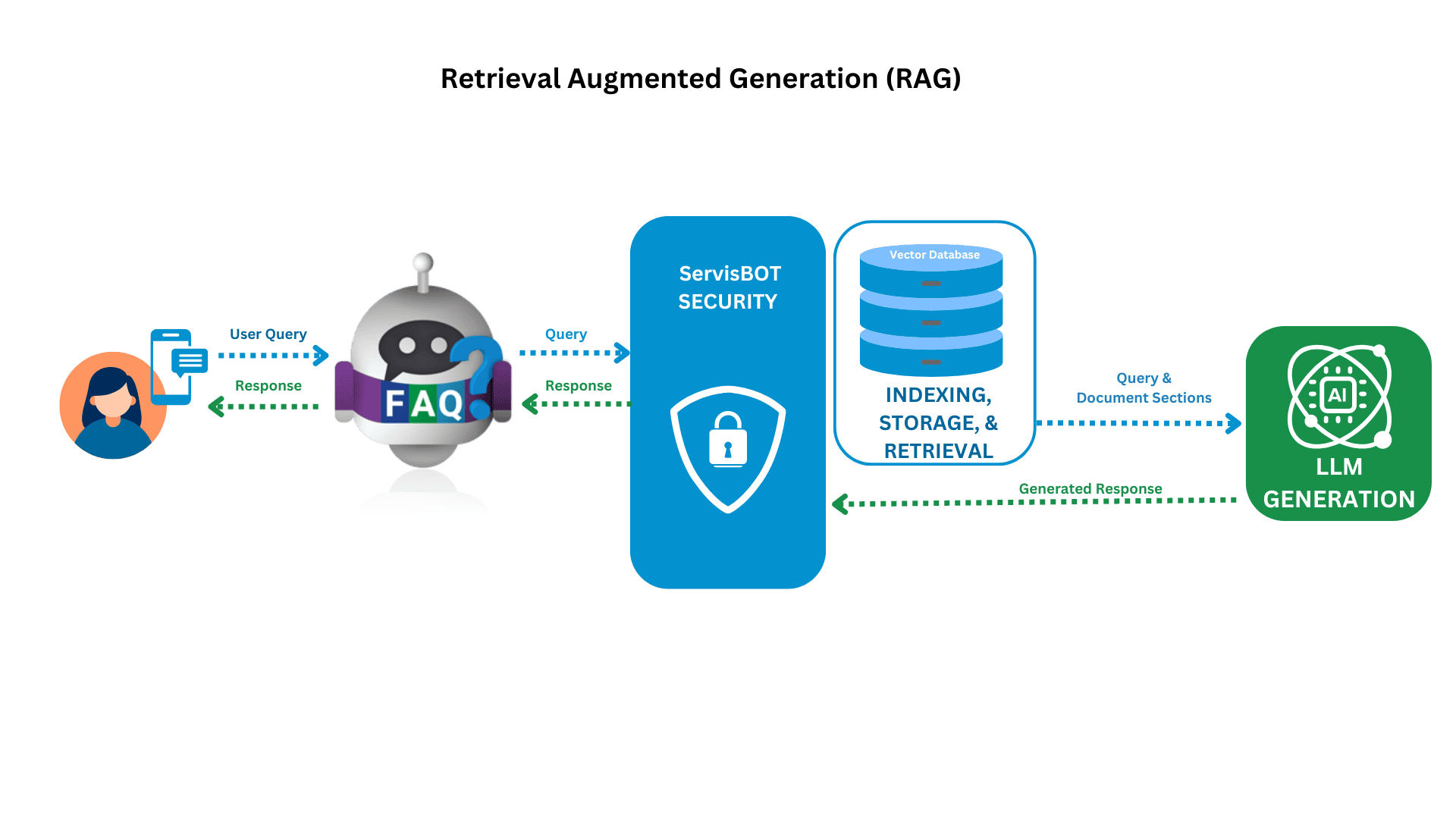

Retrieval-Augmented Generation (RAG) is a technique in the field of Natural Language Processing and Artificial Intelligence that enhances generative AI by integrating the Large Language Model with external information retrieval systems. RAG can draw on a variety of data sources to improve the performance and accuracy of generative models like LLMs. The LLM generates better responses because the information sent to it alongside the query gives it more context.

RAG is particularly useful in scenarios where a generative model needs to provide up-to-date, factual, or detailed information that is not contained within its training data. For example, it can be used for question answering systems, content creation, and augmenting AI assistants with the ability to pull in real-time data or specific knowledge.

This approach combines the creative and linguistic strengths of generative models with the factual and expansive knowledge base of retrieval systems. It helps in generating more accurate, relevant, and contextually rich responses, easing the concerns of business leaders around adoption of generative AI solutions.

RAG Highlights

RAG combines a neural network-based generative model, such as a language model, with a retrieval system. The retrieval system is typically a knowledge base, database, or search engine that can fetch relevant information based on the input query or context.

Documents and content, such as web pages, can be indexed in advance using open-source tools and stored in a vector database, which can then be searched for the articles or content relevant to answering a query.

When the model receives a query, the retrieval system is first used to find the relevant documents or information snippets that might be helpful to the LLM in responding. This enables the LLM to use the information provided to generate a better answer. The more relevant information that is provided, the lower the risk that the LLM will hallucinate or provide incorrect information.

The retrieved information is then provided as additional context to the generative model. This allows the model to generate responses or content that is informed by the retrieved data. Essentially, the model uses the external information to enhance its understanding and improve the relevance and accuracy of its outputs.

RAG Data Sources

The types of data sources that can be integrated with RAG typically include:

- Databases: Structured data stored in databases can be queried to provide specific, factual information that aids the generative process.

- Knowledge Bases: Specialized knowledge databases which can include relationships between entities and attributes of those entities.

- Document Stores: Collections of textual documents, such as news articles, research papers, corporate documents, or ebooks, that can be indexed and retrieved based on relevance to the input query.

- Images and OCR Content: Integrating RAG with Optical Character Recognition (OCR) and/or image files enables contextual information to be extracted from these content types. This can then be used in generating responses to user queries.

- Web Content: The Internet can be a source of up-to-date information, and web scraping techniques can be used to extract data from web pages, which can then be fed into the RAG system.

- APIs: Various external APIs can provide real-time data or specific pieces of information that are not readily available in local data stores.

- Corporate Repositories: Internal repositories like corporate intranets, CRMs, and ERP systems often contain valuable proprietary information that can be utilized by RAG systems to generate informed responses.

- Social Media: Data from social media platforms can provide insights into current trends, public opinion, and more.

How ServisBOT RAG Works

1. The ServisBOT RAG Indexing and Storage Process

Key to the performance of RAG is the document indexing and storage process: its efficiency and accuracy directly affect how well the RAG system generates informed and contextually relevant responses. The indexing process can be outlined in the following key steps, focusing on how data flows and where it is stored.

Collection and Preprocessing of Source Documents

The process begins with the collection of a wide range of data sources. These are generally textual sources but RAG can also be integrated with Optical Character Recognition (OCR) and image files. The purpose is to create a comprehensive database that the RAG system can reference. Often the collected data needs to be cleaned up and prepared before using it. Preprocessing may include removing irrelevant sections, formatting the text for consistency, and handling special characters or encoding issues. This ensures that the data is in a suitable format for further processing.
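As a rough illustration of the clean-up described above, the following Python sketch normalizes encoding, strips control characters, and makes whitespace consistent. The function name `preprocess` and the specific rules are assumptions chosen for the example; they are not a description of ServisBOT's pipeline.

```python
import re
import unicodedata


def preprocess(raw_text: str) -> str:
    """Normalize raw document text before tokenization (illustrative rules only)."""
    # Normalize Unicode so visually identical characters share one encoding.
    text = unicodedata.normalize("NFKC", raw_text)
    # Drop control and format characters, but keep newlines and tabs for now.
    text = "".join(
        ch for ch in text
        if ch in "\n\t" or unicodedata.category(ch)[0] != "C"
    )
    # Collapse runs of spaces and tabs into a single space.
    text = re.sub(r"[ \t]+", " ", text)
    # Limit consecutive blank lines to one.
    text = re.sub(r"\n\s*\n+", "\n\n", text)
    return text.strip()
```

Real pipelines usually add source-specific steps, such as removing navigation menus from web pages or headers and footers from PDFs.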

Tokenization

In this phase, the preprocessed text is broken down into smaller pieces, known as tokens. These tokens can be words, phrases, or other meaningful units of text. Tokenization is crucial for understanding and processing natural language data.
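A minimal sketch of word-level tokenization in Python is shown here. It is a simplification: production systems generally rely on the tokenizer that ships with their chosen language or embedding model, which often splits text into subword units. The `tokenize` name and the regular expression are assumptions for illustration.

```python
import re


def tokenize(text: str) -> list[str]:
    """Split text into lowercase word tokens, keeping simple contractions whole."""
    return re.findall(r"[a-z0-9]+(?:'[a-z]+)?", text.lower())
```

For example, `tokenize("What's RAG?")` yields the two tokens `what's` and `rag`.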

Vectorization

After tokenization, the text is converted into numerical vectors. This process, known as vectorization, involves representing textual tokens in a format that a machine learning model can understand and process. The vectors capture semantic and syntactic information about the tokens.
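To make the idea concrete, the sketch below turns a list of tokens into a fixed-length numerical vector using the hashing trick. This is a deliberately simple stand-in: it captures only which words occur, whereas the learned embedding models used in real RAG systems also capture semantic similarity. The `vectorize` function and the `DIMENSIONS` value are hypothetical.

```python
import hashlib
import math

DIMENSIONS = 64  # hypothetical size; learned embeddings are typically much larger


def vectorize(tokens: list[str], dims: int = DIMENSIONS) -> list[float]:
    """Map tokens to a unit-length bag-of-words vector via the hashing trick."""
    vec = [0.0] * dims
    for token in tokens:
        # Use a stable hash so the same token always lands in the same bucket.
        digest = hashlib.sha256(token.encode("utf-8")).digest()
        bucket = int.from_bytes(digest[:4], "big") % dims
        vec[bucket] += 1.0
    # Scale to unit length so vectors of different-sized texts are comparable.
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec
```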

Indexing and Storage in a Database

The vectorized data is then indexed for efficient retrieval. Indexing involves organizing the data in a way that facilitates quick and relevant retrieval based on query inputs. Once indexed, this data is stored in a vector database or a search engine. The storage solution is typically optimized for fast search and retrieval operations, and it can handle complex queries efficiently.
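The storage step can be pictured with a toy in-memory index like the one below. It is only a sketch of the idea of keeping each vector alongside its source text under a document ID; a real vector database adds persistence, encryption, and approximate nearest-neighbor search structures. The `VectorIndex` class and its method names are assumptions, not an actual product API.

```python
from dataclasses import dataclass, field


@dataclass
class VectorIndex:
    """Toy in-memory stand-in for a vector database."""

    # Maps a document ID to its (vector, original text) pair.
    records: dict[str, tuple[list[float], str]] = field(default_factory=dict)

    def upsert(self, doc_id: str, vector: list[float], text: str) -> None:
        """Insert a new document or overwrite an existing one."""
        self.records[doc_id] = (vector, text)

    def delete(self, doc_id: str) -> bool:
        """Remove a document; return True if it was present."""
        return self.records.pop(doc_id, None) is not None

    def __len__(self) -> int:
        return len(self.records)
```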

Maintenance and Updates

The final ongoing step involves maintaining and updating the indexed database. This includes adding new documents, re-indexing if necessary, and ensuring the database’s integrity and performance. Regular updates help the RAG system stay current with the latest information and knowledge.
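One common way to decide whether a document needs re-indexing is to compare a hash of its current content with the hash recorded at the last indexing run. The sketch below assumes that approach; the `needs_reindex` function and the `known_hashes` dictionary are hypothetical and stand in for whatever change tracking a given system uses.

```python
import hashlib


def needs_reindex(doc_id: str, text: str, known_hashes: dict[str, str]) -> bool:
    """Return True if the document is new or changed since it was last indexed."""
    digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
    if known_hashes.get(doc_id) == digest:
        return False  # Content is unchanged, so the stored vectors are still valid.
    # Record the new hash so the next run sees this version as current.
    known_hashes[doc_id] = digest
    return True
```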

Throughout this process, the focus is on creating a robust and searchable database that the RAG system can use to retrieve relevant information in response to specific queries.

ServisBOT uses the latest open-source tools to index the information and store it, in encrypted form, in a vector database hosted on the ServisBOT platform.

2. The ServisBOT RAG Retrieval Process

In the ServisBOT RAG system, the retrieval process is critical for ensuring that the generated responses are informed by relevant and accurate information. The temporary nature of data storage during retrieval ensures that data privacy is maintained, and the system does not retain information beyond what is necessary for generating the response to the query. The ServisBOT RAG retrieval process can be broken down into the following steps:

Receiving and Processing the Query

The process begins when the ServisBOT RAG system receives a user query, for example through an AI assistant or agent assist bot. This query is typically a textual input that poses a question or requests information on a particular topic. The query is processed to understand its context and intent, leveraging semantic search capabilities. This may involve parsing the query, tokenizing it into smaller units, and transforming it into a vector in the same way as the documents were during indexing. Because a vector database stores information as vectors, numerical representations of text that capture its semantic meaning, the query must be in the same form to be compared against it.

Searching the Indexed Database

Using the processed query, the ServisBOT RAG system searches the previously indexed vector database of documents and content. The goal is to find documents or text passages that are most relevant to the query. This involves comparing the query’s vector representation with those in the indexed database.
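A widely used way to compare two vectors is cosine similarity, which measures the angle between them regardless of their length. The sketch below shows the calculation in plain Python; the source does not state which similarity measure ServisBOT uses, so treat this as one common option rather than the actual method.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # An all-zero vector has no direction to compare.
    return dot / (norm_a * norm_b)
```

A score near 1 means the query and document point in nearly the same direction, and a score near 0 means they have little in common.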

Retrieving Relevant Documents

The search results in a set of documents or passages that are deemed relevant to the query. The number of documents retrieved can vary, but typically, the system selects the top results based on relevance scores.
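Selecting the top results by relevance score can be sketched as follows. The `top_k` function, the default of three results, and the optional `min_score` cut-off are assumptions for illustration; appropriate values depend on the data and the model's context window.

```python
import heapq


def top_k(
    scores: dict[str, float], k: int = 3, min_score: float = 0.0
) -> list[tuple[str, float]]:
    """Return up to k (doc_id, score) pairs with the highest scores, best first."""
    # Discard weak matches so irrelevant passages never reach the model.
    candidates = (
        (doc_id, score) for doc_id, score in scores.items() if score >= min_score
    )
    return heapq.nlargest(k, candidates, key=lambda item: item[1])
```

For instance, given scores of 0.9, 0.2, 0.75, and 0.4 for documents `a` to `d`, calling `top_k(scores, k=2)` keeps `a` and `c`.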

The retrieved documents are temporarily stored for further processing. This temporary storage is used to hold the data during the generation phase of the RAG process. This storage typically does not retain data after the process is complete.

Preparation for Generation

The retrieved documents are then used to provide context for the generation phase. The system may further process this data, such as extracting key information or summarizing the content, to make it more useful for generating a coherent and contextually relevant response.

3. The ServisBOT RAG Generation Process

In the ServisBOT RAG system, the generation process is central to producing high-quality, informed responses. It leverages both the context provided by retrieved documents and the nuanced understanding of the user query. The temporary nature of data storage in this phase ensures that the process is both efficient and respectful of data privacy concerns.

The generation process in RAG involves several key steps. This process synthesizes information from retrieved documents to generate a coherent and contextually relevant response.

Integration of Retrieved Data

The process begins with the integration of data retrieved during the retrieval phase. This data, consisting of relevant documents or text snippets, provides the factual and contextual basis for generating a response. The original user query and the contextual information from retrieved documents are merged.

This step ensures that the generation process is grounded in both the user’s intent and the contextual data. ServisBOT passes the retrieved data and the initial query, together with other context and role information, to the LLM endpoint.
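The merging of query, retrieved passages, and role information might look something like the sketch below. The list-of-messages layout with `role` and `content` keys is a convention used by many chat-style LLM endpoints and is assumed here; the actual payload ServisBOT sends is not described in this article, and `build_prompt` and its instruction wording are hypothetical.

```python
def build_prompt(
    query: str,
    passages: list[str],
    role: str = "You are a helpful assistant.",
) -> list[dict[str, str]]:
    """Combine role instructions, retrieved passages, and the user query."""
    # Number each passage so the model (and a reviewer) can tell them apart.
    context = "\n\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    system = (
        f"{role}\n"
        "Answer using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": query},
    ]
```

Instructing the model to rely only on the supplied context is what keeps responses ring-fenced to the indexed content.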

Contextual Analysis

The system performs a contextual analysis of the retrieved data. This involves understanding the key themes, facts, and details within these documents. The aim is to extract relevant information that aligns with the user’s query.

Response Generation

A generative AI model takes the combined input, that is, the user query and the contextual data, and generates a text response. This step involves complex language modeling, where the AI synthesizes information to produce a coherent and relevant answer or content.

Temporary Storage of Generation Data

During the generation process, intermediate data such as potential responses, processing states, and other relevant information may be temporarily stored. This temporary storage facilitates the iterative process of response generation and refinement.

Output and Data Disposal

The final output is generated as a response to the user’s query, incorporating the insights from the retrieved documents. Post-generation, any temporary data used during the generation process is usually discarded. This is a crucial step to ensure data privacy and minimize unnecessary data retention.
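One way to make disposal of temporary data automatic is to scope it to a block of code, so that it is cleared even if generation fails partway through. The Python sketch below assumes that pattern; `scratch_space` is a hypothetical helper, and clearing a dictionary only drops references, so it illustrates the lifecycle rather than a secure wipe.

```python
from contextlib import contextmanager


@contextmanager
def scratch_space():
    """Provide a temporary store that is emptied when the block exits."""
    scratch: dict[str, object] = {}
    try:
        yield scratch
    finally:
        # Runs on success and on error, so nothing outlives the request.
        scratch.clear()
```

Used as `with scratch_space() as scratch:`, retrieved passages and draft responses can be held in `scratch` during generation and are gone once the block ends.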

Summary of RAG

Retrieval-Augmented Generation is emerging as an important safeguard for businesses integrating generative AI, addressing challenges such as inaccuracies, biases, and inconsistent responses often found in Large Language Models (LLMs).

RAG enhances LLMs by coupling them with external information retrieval systems, providing additional context and ensuring more accurate, relevant, and contextually rich responses. This technique combines the linguistic capabilities of generative AI models with the factual knowledge base of retrieval systems to enhance the model’s understanding and improve the relevance and accuracy of its outputs.

ServisBOT has created a comprehensive RAG technique that involves indexing, storage, retrieval and generation processes that are central to producing high-quality, informed responses. The methodology leverages both the context provided by retrieved documents and the nuanced understanding of the user query while also addressing data privacy concerns. For more information on how we help businesses implement generative AI solutions, especially in sensitive industries like financial services, please contact us.