Introducing an orchestration architecture for businesses that want to expand the capabilities of their digital assistants and chatbots and create a more unified experience across the multiple bots they have deployed.

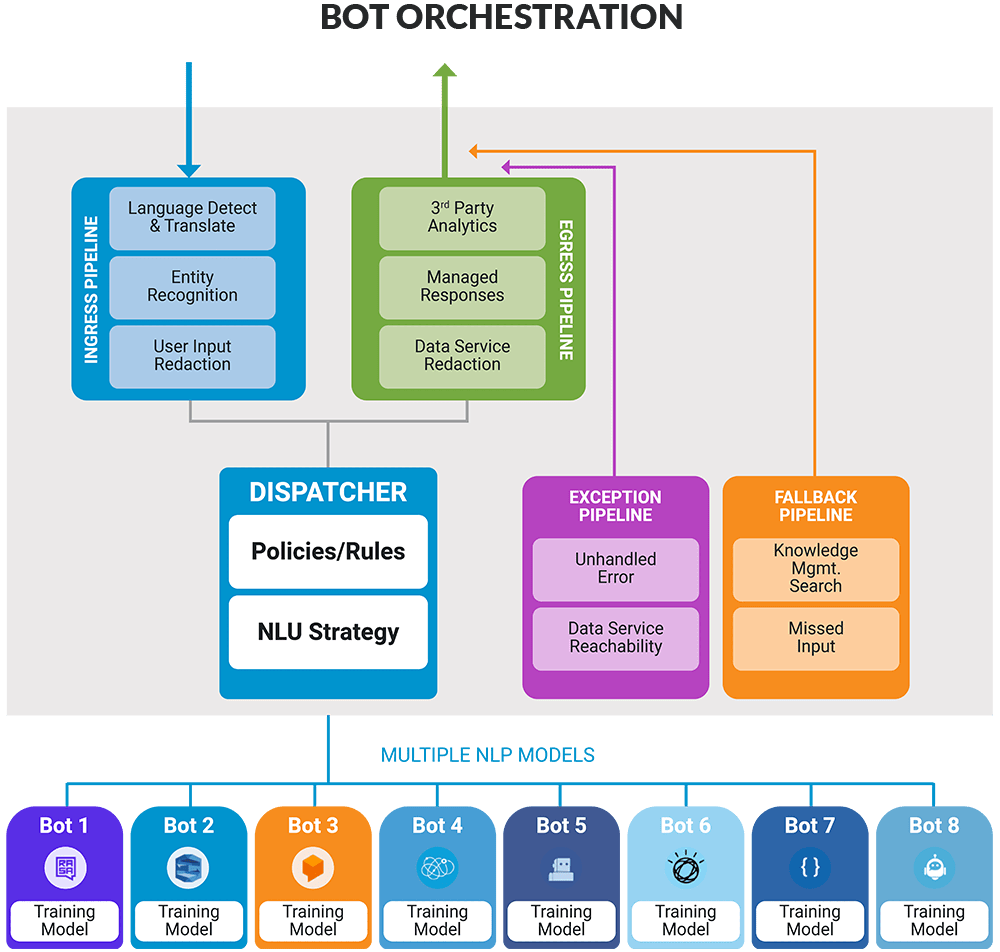

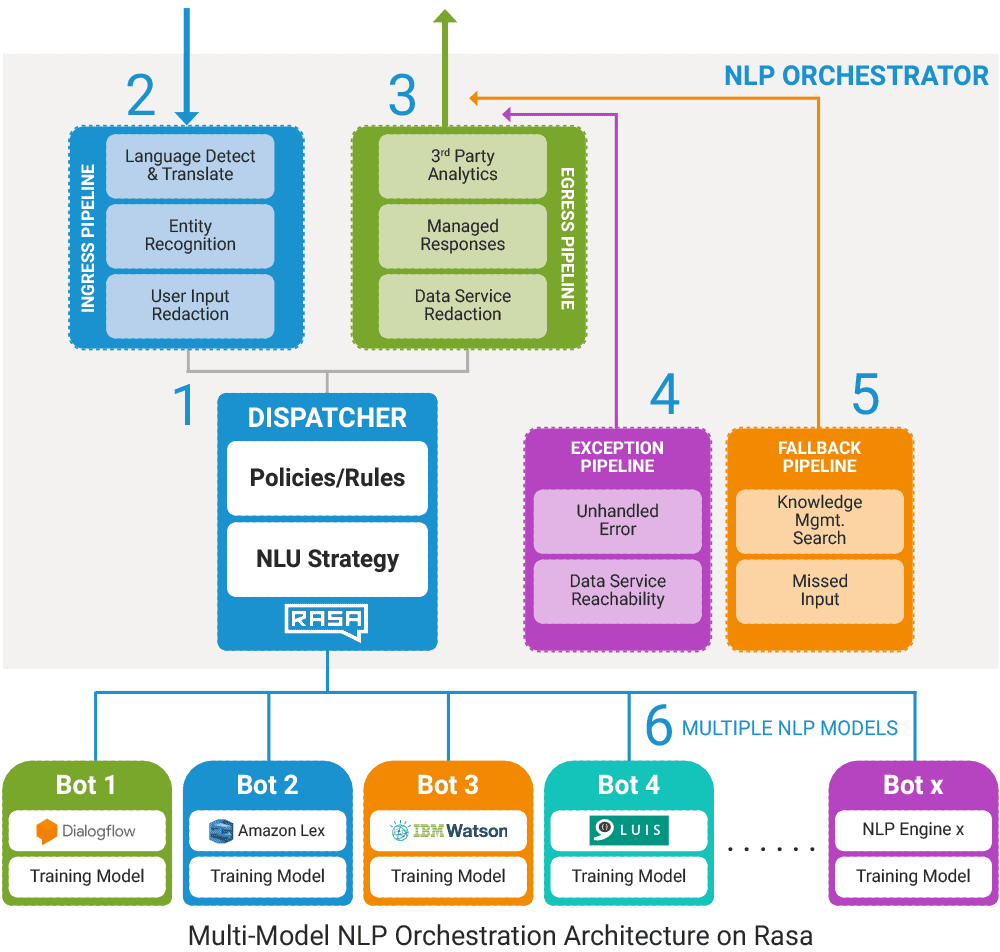

Components of the Bot Orchestrator

The Dispatcher is the brain behind the routing and orchestration. It contains an AI/ML model that learns and improves automatically as more data flows through the system, and it can apply different strategies depending on the problem to be solved and on the number or diversity of the underlying intent detection engines. It ships with “first past the post”, “parallel xxxx”, “random forest”, and other models, and it also lets you define policies and rules for handling different situations. It can be deployed to either Amazon SageMaker or Rasa.
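To make the idea of a routing strategy concrete, here is a minimal sketch of one possible reading of “first past the post”: engines are asked in order, and the first one whose confidence clears a threshold wins. The names `IntentResult`, `first_past_the_post`, the stub bots, and the 0.7 threshold are all assumptions made for illustration; they are not the ServisBOT API.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class IntentResult:
    bot: str           # which bot produced the match
    intent: str        # the intent name that bot detected
    confidence: float  # score in the range 0.0 to 1.0


# Hypothetical engine interface: utterance in, best match (or None) out.
Engine = Callable[[str], Optional[IntentResult]]


def first_past_the_post(
    utterance: str, engines: Sequence[Engine], threshold: float = 0.7
) -> Optional[IntentResult]:
    """Return the first result whose confidence reaches the threshold."""
    for engine in engines:
        result = engine(utterance)
        if result is not None and result.confidence >= threshold:
            return result
    return None  # no engine was confident enough; the caller decides what to do


# Stub engines standing in for real NLP back ends (illustrative only).
def billing_bot(utterance: str) -> Optional[IntentResult]:
    if "invoice" in utterance.lower():
        return IntentResult("billing", "get_invoice", 0.92)
    return IntentResult("billing", "unknown", 0.10)


def support_bot(utterance: str) -> Optional[IntentResult]:
    if "reset" in utterance.lower():
        return IntentResult("support", "reset_password", 0.88)
    return None


match = first_past_the_post("Please reset my password", [billing_bot, support_bot])
```

In this sketch the billing bot answers first but with low confidence, so the request passes to the support bot. A `None` return is the signal that no bot could handle the utterance.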

The ingress pipeline allows a range of pre-processing features to be applied before the data reaches the Dispatcher. These range from security features, such as automatic redaction of sensitive data like credit card numbers and social security numbers, to language detection and translation, real-time sentiment analysis, format conversion (speech to text, text to speech), entity detection, and other pre-processing applications.
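As an illustration of the redaction step, the sketch below masks US social security numbers and credit card numbers before a message is passed on. It assumes simple regular expressions plus a Luhn checksum to avoid masking arbitrary long numbers; the function names and placeholder strings are hypothetical, and a production pipeline would likely need broader patterns.

```python
import re

# Assumed formats: SSNs written as 123-45-6789, cards as 13 to 19 digits
# optionally separated by spaces or hyphens.
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
CARD_RE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum, used to tell card numbers from other long numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact(text: str) -> str:
    """Replace SSNs and Luhn-valid card numbers with placeholders."""
    text = SSN_RE.sub("[REDACTED SSN]", text)

    def _mask_card(match: re.Match) -> str:
        digits = re.sub(r"\D", "", match.group())
        return "[REDACTED CARD]" if luhn_valid(digits) else match.group()

    return CARD_RE.sub(_mask_card, text)


clean = redact("My card is 4111 1111 1111 1111 and my SSN is 123-45-6789")
# clean == "My card is [REDACTED CARD] and my SSN is [REDACTED SSN]"
```

The same function could run in the egress direction, since the check does not depend on who wrote the message.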

The egress pipeline reviews all data leaving the Dispatcher to prevent inadvertent data leakage from the organization, i.e. “oversharing”. It can also translate responses or convert them back to the customer’s original language. The egress pipeline also allows integration with third-party analytics and other post-processing activities.

The exception pipeline handles errors that are not related to the NLP, for example when the NLP engine cannot be reached for technical reasons or when a required data service is unavailable. This pipeline lets you customize how the bot responds to unusual, often technical, issues and how it tells the customer what will happen next (e.g. “try later”, “report a technical problem”, “pass to a human”…).

The fallback pipeline handles the situation where none of the bots can answer the customer’s utterance, i.e. a missed utterance or intent. The response is often a message asking the user to rephrase, or it can involve escalating to a human agent. As an additional feature, the fallback pipeline can include a knowledge management search to try to help the customer, even if the NLP has not seen the request before.
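The difference between the exception and fallback pipelines can be sketched as two separate branches around a dispatch call. Everything here is an assumption made for illustration: the `EngineUnavailable` error, the `handle_turn` function, and the response texts are hypothetical and do not come from the platform.

```python
from typing import Callable, Optional, Tuple


class EngineUnavailable(Exception):
    """Raised by the (hypothetical) dispatch call when a back end is down."""


def handle_turn(
    utterance: str,
    dispatch: Callable[[str], Optional[str]],
    search_knowledge_base: Callable[[str], Optional[str]],
) -> Tuple[str, str]:
    """Return (pipeline, reply) for a single customer utterance."""
    try:
        answer = dispatch(utterance)
    except EngineUnavailable:
        # Exception pipeline: a technical failure, not a language problem.
        return "exception", "We are having a technical problem. Please try again later."

    if answer is not None:
        return "answered", answer

    # Fallback pipeline: no bot recognised the utterance.
    article = search_knowledge_base(utterance)
    if article is not None:
        return "fallback", f"This article may help: {article}"
    return "fallback", "Sorry, I did not understand that. Could you rephrase?"


# Stubs standing in for real services (illustrative only).
def no_match(utterance: str) -> Optional[str]:
    return None


def kb_search(utterance: str) -> Optional[str]:
    return "How to update your address" if "address" in utterance else None


pipeline, reply = handle_turn("change my address", no_match, kb_search)
```

Keeping the two branches apart means a technical outage never produces a misleading “please rephrase” message.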

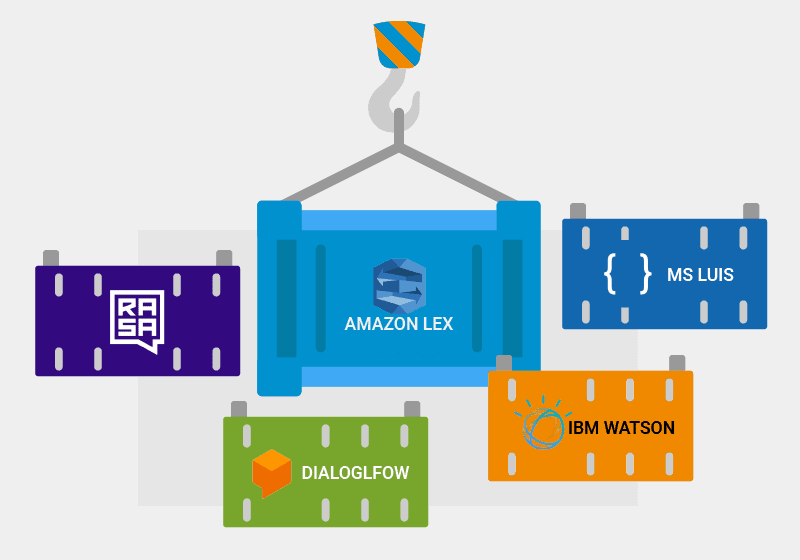

The ServisBOT platform supports all the major public NLP engines on the market today. We created a common definition format for bots which allows users to download the definition and training data from one NLP engine and upload it to another engine. This allows maximum portability and re-use, ensuring that any third-party bot developed on the public NLP engines can be imported into the ServisBOT environment and added to the multi-model orchestrator.
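The schema of the common definition format is not described here, so the following is only a sketch of what an engine-neutral bot definition could look like. The `BotDefinition` and `Intent` classes and the JSON layout are hypothetical. The point is that a neutral structure can be serialized once and then flattened into the utterance and intent pairs an engine is trained on.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Tuple


@dataclass
class Intent:
    name: str
    utterances: List[str] = field(default_factory=list)  # training phrases


@dataclass
class BotDefinition:
    name: str
    language: str
    intents: List[Intent] = field(default_factory=list)


def to_json(bot: BotDefinition) -> str:
    """Serialize the neutral definition so it can be stored or transferred."""
    return json.dumps(asdict(bot), indent=2)


def from_json(payload: str) -> BotDefinition:
    """Rebuild a definition from its JSON form."""
    raw = json.loads(payload)
    intents = [Intent(**item) for item in raw["intents"]]
    return BotDefinition(name=raw["name"], language=raw["language"], intents=intents)


def training_pairs(bot: BotDefinition) -> List[Tuple[str, str]]:
    """Flatten to (utterance, intent) pairs, a shape most trainers can consume."""
    return [(u, intent.name) for intent in bot.intents for u in intent.utterances]


bot = BotDefinition(
    name="billing",
    language="en",
    intents=[Intent("get_invoice", ["send my invoice", "where is my bill"])],
)
restored = from_json(to_json(bot))
```

An importer or exporter for a specific engine would then be a mapping between this neutral structure and that engine's own file format.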

Overcome NLP Limitations when Creating Advanced Bot Solutions

Many NLP engines limit the number of unique intents they support, while others require larger amounts of data and trained data scientists (and so larger budgets) to support increasing numbers of intents. The multi-bot orchestrator can extend the number of intents by at least two orders of magnitude (10² = 100), allowing more topics, more variations in customer queries, and more complex problems without the large budgets required for similar monolithic solutions.

Mix and Match Different NLP Engines

Each of the many NLP engines has advantages and disadvantages. Some are easy for casual users to get started with while others require trained data scientists. One or two can handle numbers and special characters better than others. Some are good for the English language only while others support multiple native languages (including Arabic and Chinese). The ability to mix and match these engines depending on the problem that needs to be solved ensures maximum flexibility and low vendor lock-in.

Interoperability and Portability of your Bots

Being able to combine bots developed on several NLP engines so that they work together in a single customer interaction is one of the main advantages of the multi-model architecture. Additionally, it embraces the Common Bot Definition Format, which enables the so-called lift-and-shift of individual chatbots from one NLP engine to another, such as from Amazon Lex to Microsoft LUIS, or from Google Dialogflow to IBM Watson. This makes it much easier to assess the effectiveness and precision of several NLP engines and to assign each workload to the engine that is most appropriate for the application.

Prevent Unwanted Data from Entering/Leaving the Business

Protecting PII, PCI, and PHI data can be tricky when customers enter social security numbers, credit card numbers, or other sensitive personal information without being prompted. Using ingress and egress pipelines, the multi-bot orchestrator constantly monitors and redacts information in real time so that customers or employees do not overshare information that shouldn’t be visible without proper authentication, encryption, or context.