Advanced AI Solutions and LLM Apps for the Enterprise

AI Assistants

AI Assistants can engage with your customers via voice, chat, or email with greater accuracy and personalization. These customer-facing LLM Apps not only converse in natural language but also automate workflows and tasks, freeing human agents to focus on more complex customer issues.

AI Copilots

AI Agents

AI or digital agents can work autonomously on defined tasks and workflows. These LLM Apps are highly task-oriented, performing advanced automation at scale. For example, they can analyze vast datasets to identify risks, summarize lengthy reports, or process documents, without human intervention.

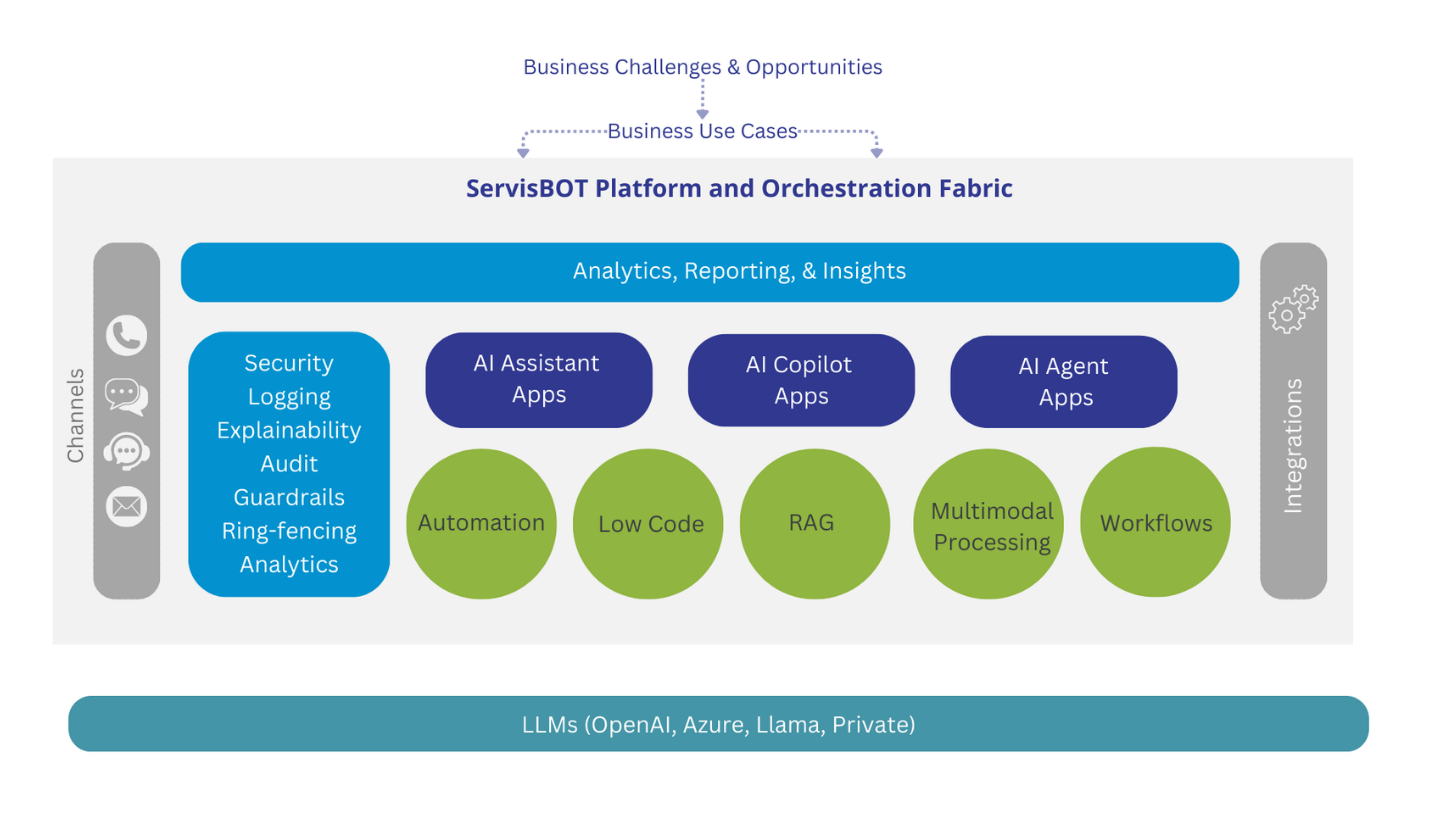

Low-code tools on our platform help you build and deploy these LLM apps quickly. They deliver a fast return on investment while putting the necessary safeguards and an AI security framework in place to give you peace of mind.

ServisBOT LLM App Platform

AI Security Framework

Security, Reliability, & Traceability

Security, reliability, and traceability are essential to safeguarding data, maintaining trust, and ensuring dependable outputs from the AI systems used in business environments. Our LLM apps are designed to maximize security by protecting business data and putting safeguards in place against abuse, scope creep, and the inherent inaccuracies of LLMs.

Our solutions are designed to deliver consistent and accurate performance despite the inherent variability of LLMs. By tracing each response back to its data sources and including references, we give users a way to verify answers and build trust in the solution. Our platform focuses on consistent, reliable responses, reducing error rates and keeping latency low so that your business applications receive dependable, timely responses.

Integration & Ingestion Service

Integrating & Ingesting your Data and Process Know-how

Our Integration & Ingestion Service handles the complexities of processing structured and unstructured enterprise data through APIs. It also ingests and manages other business assets, such as knowledge bases, employee manuals, and process know-how, safely and in line with your data processing policies. This automated service monitors and manages your enterprise data, helping you extract its embedded value and keeping it current and relevant.

Managed Service for Public & Private LLMs

Flexibility to Leverage the Right LLM for Your Use Case

Our Managed Service supports multiple LLMs, public or private, and evaluates which one best fits each task, such as multimodal processing, security, or document handling. The service optimizes cost, latency, and reliability through model arbitrage and token management.

Offering a broad choice of LLMs avoids vendor lock-in and helps keep costs under control. The service also includes feedback loops to improve quality, observability to track performance, and ongoing monitoring of new LLM developments to guide decisions. This helps ensure optimal output and prevents users from inadvertently increasing costs.
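The following minimal Python sketch illustrates the general idea of model arbitrage with a token budget. It assumes a hypothetical catalog of models, each with a per-1,000-token price, an observed 95th-percentile latency, a set of capabilities, and a public/private flag. It is not ServisBOT's implementation. The names `ModelOption`, `estimate_tokens`, and `choose_model`, the model names, and all prices and latencies are illustrative assumptions. The router first excludes any model that lacks the required capability, exceeds the latency budget, or would exceed a per-request cost cap. It then picks the cheapest remaining option.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelOption:
    name: str                  # hypothetical model identifier
    cost_per_1k_tokens: float  # hypothetical price in USD per 1,000 tokens
    p95_latency_ms: int        # hypothetical observed 95th-percentile latency
    capabilities: frozenset    # e.g. frozenset({"text", "vision"})
    private: bool              # True if the model is privately hosted


def estimate_tokens(text: str) -> int:
    # Rough heuristic (assumption): about 4 characters per token for English text.
    return max(1, len(text) // 4)


def choose_model(
    options: list,
    prompt: str,
    required_capability: str,
    max_latency_ms: int,
    max_cost_usd: float,
    require_private: bool = False,
) -> Optional[ModelOption]:
    """Return the cheapest model that meets every constraint, or None."""
    tokens = estimate_tokens(prompt)
    eligible = []
    for model in options:
        if required_capability not in model.capabilities:
            continue
        if model.p95_latency_ms > max_latency_ms:
            continue
        if require_private and not model.private:
            continue
        cost = tokens / 1000 * model.cost_per_1k_tokens
        if cost > max_cost_usd:
            continue  # token budget: skip models that would overspend
        eligible.append((cost, model))
    if not eligible:
        return None
    # Cheapest first; break ties on lower latency.
    return min(eligible, key=lambda pair: (pair[0], pair[1].p95_latency_ms))[1]


# Hypothetical catalog for illustration only.
catalog = [
    ModelOption("public-large", 0.03, 1800, frozenset({"text", "vision"}), False),
    ModelOption("public-small", 0.002, 600, frozenset({"text"}), False),
    ModelOption("private-medium", 0.01, 900, frozenset({"text"}), True),
]

prompt = "x" * 4000  # roughly 1,000 estimated tokens
print(choose_model(catalog, prompt, "text", 1000, 1.0).name)  # → public-small
print(choose_model(catalog, prompt, "text", 1000, 1.0, require_private=True).name)  # → private-medium
print(choose_model(catalog, prompt, "vision", 1000, 1.0))  # → None
```

A per-request cost cap like `max_cost_usd` is one simple way to stop a request from silently running up spend. A production router would more likely use token counts reported by the provider than a character-based estimate.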

Using LLM Apps for Delivering Insights

AI Insights Help Make Better Decisions

The ability of LLMs to analyze large datasets and deliver real-time, multimodal insights and categorization (from voice, text, and images) without requiring structured data is game-changing. Because LLMs are not constrained by traditional database technologies, they can quickly synthesize information from multiple sources and vast business datasets. This lets them provide the best possible responses and identify topics, patterns, and anomalies for faster, better decision-making.

Semantic search lets users query data freely in natural language and uncover actionable insights in real time. These capabilities make a business more agile and more responsive in managing risk, pursuing new opportunities, and making strategic decisions.
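The retrieval step behind semantic search can be sketched as ranking documents by the cosine similarity between a query vector and each document's vector. In a real system those vectors would come from a learned embedding model, which is what captures meaning. This minimal Python sketch instead uses a bag-of-words term count as a stand-in for the embedding, so it runs without a model. Because of that assumption it matches words rather than meaning. The function names `embed`, `cosine`, and `search` and the sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Stand-in for a learned embedding model (assumption): lowercase term counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse vectors; 0.0 if either is empty.
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def search(query: str, documents: dict, top_k: int = 2) -> list:
    """Return up to top_k document IDs ranked by similarity to the query."""
    query_vec = embed(query)
    scored = [(cosine(query_vec, embed(text)), doc_id) for doc_id, text in documents.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents with no similarity at all.
    return [doc_id for score, doc_id in scored[:top_k] if score > 0]


# Hypothetical sample documents for illustration only.
documents = {
    "claims-q3": "Insurance claims rose sharply in the third quarter",
    "churn": "Customer churn increased after the pricing change",
    "fraud": "Unusual claims patterns may indicate fraud risk",
}

print(search("claims risk", documents))  # → ['fraud', 'claims-q3']
```

To make this search semantic, you would replace `embed` with calls to an embedding model and keep the ranking logic. Large collections usually also need a vector index rather than a linear scan.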

Let Us Help You Get Started Quickly

1. Ideas & Use Cases: Understand LLM Potential, Generate Ideas, Prioritize Easy Wins
2. Business Case: Develop ROI Analysis, Assess Risks, Identify Security Concerns
3. Planning: Create Project Plan, Assign Resources, Identify Metrics & Report Card
4. Implementation: Build MVP, Iterate to Hit Metrics, Roll Out and Train
5. Review: Business Outcomes, LLM Performance, Goals Achieved, Communicate Results